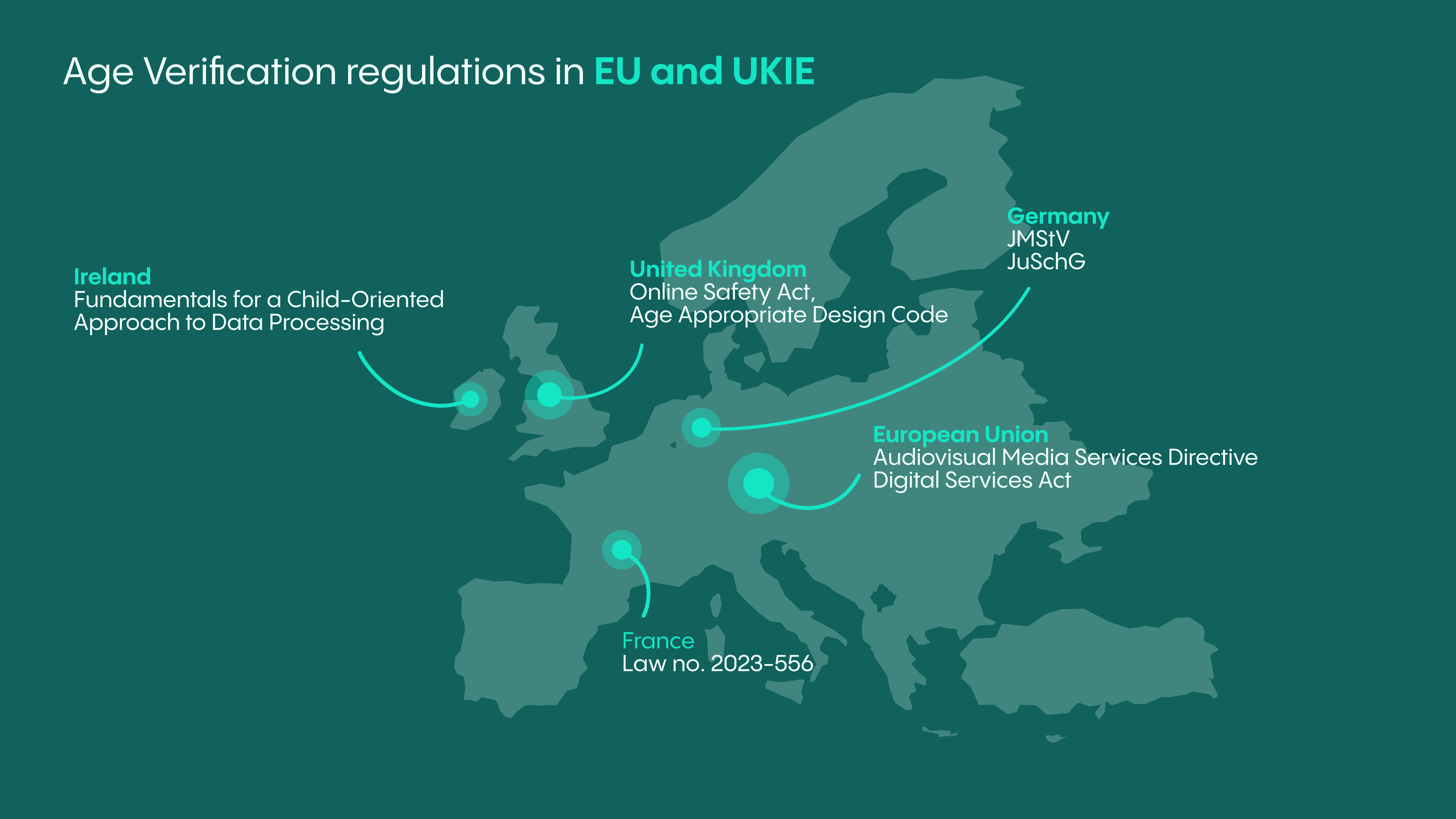

Age verification regulations in the EU and the UK

Several countries are introducing legislation to protect children from harmful content online, and efforts at the EU and UK level are growing as well. Here are some of the most recent and most impactful laws.

Dmytro Sashchuk

In an increasingly digital world, protecting minors from harmful content is a top priority for governments worldwide. Although the extent of minors' exposure to inappropriate content varies between countries, the EU Kids Online 2020 report found that, on average, 33% of children aged 9 to 16 had seen sexual images. The same study found that around 10% of children aged 12 to 16 consume harmful content at least once a month. The data gives governments and regulators seeking to protect young people a strong case for laws that require age verification solutions to minimize children's exposure to adult content.

As a result, age verification has become one of the hottest topics in identity verification today, as jurisdictions worldwide tighten restrictions on free access to content that is harmful to young people while limiting the collection of data from underage users. This is evident from regulators' efforts to signal to companies the urgency of implementing appropriate age verification systems; examples include the UK, Germany, Spain, and France. From social media platforms to streaming services, online businesses in every sector must meet growing age verification requirements to satisfy their compliance obligations. Doing so also helps build trust among users and improves brand reputation.

To avoid severe fines or other liabilities, online businesses should therefore seriously consider age verification technologies that let users verify their age easily and conveniently and that ensure only adults can access adult content. Failing to meet age verification requirements can bring serious legal consequences and significant reputational damage, and non-compliant businesses risk losing customers if they fail to protect children.

With solid knowledge of the legislative framework, our legal experts are well placed to help you identify the most important applicable and upcoming age verification regulations in the EU and the UK. A closer look at age verification laws across countries shows that, while the overall goal of protecting minors remains constant, the approaches vary. Let's examine how jurisdictions address this critical issue within their legal frameworks.

1. European Union

Audiovisual Media Services Directive

Regulation overview

The EU's Audiovisual Media Services Directive (AVMSD) governs EU-wide coordination of national legislation on all audiovisual media, both traditional TV broadcasts and on-demand services. As a directive, it only creates tools for Member States to build an effective and (somewhat) harmonized level playing field for all audiovisual media service providers in the EU. In practical terms, the AVMSD is the underlying reason why many EU countries have implemented regulations requiring age restriction measures, since these are among the most effective tools for preventing minors from accessing harmful material. The AVMSD was last revised in 2018.

Applicability:

Although the AVMSD does not by itself create direct obligations for service providers, it sets the objectives that each Member State must achieve by aligning its national law with the directive. In practice, this means the AVMSD applies directly to Member States, which must put measures in place for audiovisual service providers in the EU, and affects those providers only indirectly.

Some notable requirements for each Member State include:

- Ensuring that a media service provider under its jurisdiction makes basic company information easily, directly, and permanently accessible to end users.

- Ensuring that audiovisual media services provided in its jurisdiction do not contain any incitement to violence or hatred directed against a group of persons or a member of a group, or any public provocation to commit a terrorist offence.

- Ensuring that audiovisual media services under its jurisdiction that may impair the physical, mental, or moral development of minors are only made available in such a way that minors will not normally hear or see them.

- Ensuring that audiovisual media services under its jurisdiction are made continuously more accessible to persons with disabilities through proportionate measures.

Link:

Audiovisual Media Services Directive 2010/13/EU

Status

- The original AVMSD was signed into law on March 10, 2010

- The original AVMSD has been applicable since May 5, 2010

- The amended AVMSD has been applicable since December 18, 2018

Exemptions, if any:

Exemptions are generally determined by Member States.

Applicable sanctions

Imposed at the national level

Supervisory authority:

Each Member State must designate one or more national regulatory bodies. In addition, at EU level, the European Regulators Group for Audiovisual Media Services (ERGA) was created with the task, among others, of ensuring consistent implementation of the AVMSD.

Regulation (EU) 2022/2065 of the European Parliament and of the Council of 19 October 2022 on a Single Market For Digital Services and amending Directive 2000/31/EC (Digital Services Act or DSA)

Regulation overview:

The Digital Services Act, commonly known as the DSA, is one of the most recent and impactful products of EU lawmakers in the area of preventing illegal and harmful activities online. Among others, the DSA regulates social media platforms, including social networks (such as Facebook) and content-sharing platforms (such as TikTok), as well as app stores and online marketplaces. Requirements under the DSA can be broken down into tiers. While a “general” tier applies to all regulated entities, other requirements apply depending on the nature of the service provided.

Applicability:

The DSA applies to intermediary services that provide information society services, such as a ‘mere conduit’ service, a ‘caching’ service, or a ‘hosting’ service.

In other words, this includes the following:

- internet access providers,

- hosting services such as cloud computing and web hosting services,

- domain name registrars,

- online marketplaces,

- app stores,

- collaborative economy platforms,

- social networks,

- content-sharing platforms,

- online travel and accommodation platforms

As mentioned, the DSA's effects are structured according to the nature of the service that intermediaries provide. The first tier (that is, 'general requirements') applies to all of the intermediary services mentioned above. Some notable general requirements include:

- All intermediary services must designate a single point of contact for communicating with EU authorities and with recipients of the service.

- All intermediary services must make reports on the content moderation practices they employ public and easily accessible in a machine-readable format.

The second tier of requirements is specific to the type of service, depending on whether the intermediary service is a hosting service, an online platform, or an online marketplace:

For hosting services, notable requirements include:

- Implement a ‘notice and action mechanism’ through which users can flag illegal content for the service provider to remove.

- Provide a ‘statement of reasons’ to any user whose information is restricted by the service provider on the grounds of illegality.

For online platforms, notable requirements include:

- Prioritize notices of illegal content from ‘trusted flaggers’ and suspend recipients of the service who misuse the platform by providing illegal content or submitting manifestly unfounded complaints.

- Ensure transparency about any advertising on the platform and any recommender system the platform uses.

- Put measures in place to ensure a high level of privacy, safety, and security for recipients of the service who are minors.

For online marketplaces, notable requirements include:

- Obtain and display to consumers details of the traders that use their services to market goods or services.

- Design their online interfaces in a way that makes it easy for traders to comply with their obligations regarding pre-contractual information, compliance, and product safety information under EU law.

- Inform consumers who have been offered an illegal product or service about the illegality and possible means of redress.

Finally, the DSA has separate requirements that apply to particularly large entities designated as Very Large Online Platforms or Very Large Online Search Engines. Their notable requirements include:

- Assess, mitigate, and respond to risks arising from the use of their services.

- Ensure compliance with the DSA by undergoing independent audits, providing requested data to regulators, and appointing a compliance officer.

- Pay a supervisory fee to the European Commission to cover its supervision costs.

Link

Status

Entered into force on November 16, 2022;

Became applicable on February 17, 2024 (except for some designated articles that take effect on later dates).

Applicable sanctions:

The precise amount of any fine is determined by the national authority designated as supervisor under the DSA and may vary from country to country. However, the DSA caps the maximum fines that can be imposed for non-compliance with its requirements, namely:

- 6% of annual worldwide turnover in the preceding financial year for failure to comply with an obligation under the DSA;

- 1% of annual income or annual worldwide turnover in the preceding financial year for supplying false, incomplete, or incorrect information or for failing to submit to an inspection; and

- 5% of average daily worldwide turnover or income per day as a periodic penalty.
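To make the three caps concrete, here is a minimal sketch of the arithmetic in Python. The function name, the dictionary keys, and the choice to derive average daily turnover by dividing annual turnover by 365 are illustrative assumptions, not something the DSA or any regulator prescribes; actual fines are set by the competent authority and may be far below these ceilings.

```python
def dsa_fine_caps(annual_turnover_eur: float, days_of_delay: int = 0) -> dict[str, float]:
    """Return the maximum fines described above for a given worldwide turnover.

    All names here are hypothetical; this only illustrates the percentages.
    """
    # Assumption: average daily turnover approximated as annual turnover / 365.
    average_daily_turnover = annual_turnover_eur / 365
    return {
        # 6% of annual worldwide turnover for breaching a DSA obligation
        "non_compliance": annual_turnover_eur * 6 / 100,
        # 1% for false, incomplete, or incorrect information, or refusing inspection
        "information_failure": annual_turnover_eur * 1 / 100,
        # 5% of average daily turnover, per day, as a periodic penalty
        "periodic_penalty": average_daily_turnover * 5 / 100 * days_of_delay,
    }


if __name__ == "__main__":
    for label, cap in dsa_fine_caps(365_000_000, days_of_delay=10).items():
        print(f"{label}: up to EUR {cap:,.0f}")
```

The sketch treats each cap separately because they attach to different kinds of infringement; it does not attempt to model how an authority would combine or scale them.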

Supervisory authority:

Each EU country has been tasked with designating the authority responsible for supervising and enforcing the DSA. The most up-to-date information on the designation of national authorities can be found here.

Very Large Online Platforms and Very Large Online Search Engines, on the other hand, are supervised directly by the European Commission.

Germany

Interstate Treaty on the Protection of Human Dignity and the Protection of Minors in the Media

Regulation overview:

The German legal framework, though one of the oldest, is perhaps one of the most comprehensive when it comes to protecting minors in the media. The Interstate Treaty on the Protection of Human Dignity and the Protection of Minors in the Media (JMStV) and the Federal Protection of Minors Act (JuSchG) form the backbone of the protections that safeguard children and adolescents by requiring media providers to impose age restrictions on content that could affect children's normal development. The JMStV is notable because it established the Commission for the Protection of Minors in the Media (known as the KJM). It also gave the KJM the power to set up a framework for accrediting age verification solutions, such as Veriff.

Applicability:

The JMStV applies to media providers, whether broadcasters or telemedia service providers. To reflect rapid technological development in this area and to incorporate the requirements of the AVMSD, the scope of companies that must comply with the JMStV was extended in 2020 to include video-sharing services.

Notable requirements of the JMStV include:

- Prevent or block any content deemed illegal under the German Criminal Code, as specified in Articles 4(1) and (2) of the JMStV.

- Ensure that content harmful to minors is available only to users in the appropriate age category, while other age groups are blocked from accessing it by suitable means.

- For video-sharing platforms that supply content harmful to the development of minors, introduce and apply age verification systems authorized by the KJM and enable parental controls.

- Ensure that advertising complies with the same content restrictions as the service itself.

- For pay-TV service providers whose services involve harmful content, appoint a child protection officer.

- Ensure compliance with broadcast time restrictions where the content provided may negatively affect children.

Link

Status

In force since 2003

Exemptions, if any

Not applicable; however, the JMStV permits certain derogations authorized by the KJM or the media authorities of the German federal states (see Article 9 of the JMStV).

Applicable sanctions:

The KJM can enforce the JMStV by blocking German users' access to non-compliant services. Service providers that violate the JMStV's requirements can be imprisoned or fined up to EUR 300,000.

Supervisory authority:

KJM

Federal Protection of Minors Act

Regulation overview:

The Federal Protection of Minors Act (JuSchG), while broader than the JMStV, also contains requirements relevant to businesses regarding the protection of minors. The main difference is that the JuSchG imposes precautionary requirements to ensure safe experiences for minors, particularly in the digital environment.

Applicability:

The JuSchG is considerably broader than the JMStV and contains requirements that may be relevant not only to media service providers but also to other businesses. For example, the act restricts minors' attendance at restaurants and public dance events and prohibits their consumption of alcohol; these restrictions must in turn be enforced by any business that hosts such activities. At the same time, the JuSchG contains provisions that are specifically relevant to media providers.

Notable requirements of the JuSchG include:

- For films and games, include a mandatory rating label approved by state authorities or authorized self-regulatory organizations, and ensure they are released only to permitted age groups.

- For media distributors that organize public film screenings (that is, cinemas), restrict attendance to permitted age groups only.

- Impose restrictions on media carriers labeled “Not approved for young persons”, namely a ban on selling them by mail order or distributing them through channels where customers can obtain them without any control.

- In addition, the JuSchG prohibits including in media carriers any media that the supervisory authority has determined to be harmful to minors.

Link

Status

In force since 2003

Exemptions, if any

Not applicable; however, the JuSchG permits certain one-off derogations depending on the restriction.

Applicable sanctions:

Service providers that violate the JuSchG risk not only administrative fines ranging from EUR 50,000 to EUR 5,000,000 but also criminal penalties, including imprisonment.

Supervisory authority:

The Federal Review Board for Media Harmful to Minors

France

Law no. 2023-556

Regulation overview: The law imposes a set of obligations on online social networking service providers with the aim of protecting children from the negative effects of internet exposure and safeguarding the digital environment from cyberbullying.

Applicability: The law imposes the following requirements on online social networking service providers (that is, social media platforms) operating in France.

Some notable requirements include:

- refuse registration of minors under 15 for their services unless authorization has been obtained from a parent or guardian;

- the same conditions apply to existing accounts, meaning that the express authorization of a parent or guardian must be obtained as soon as possible;

- a parent or guardian must be able to ask the online social networking service to suspend the account of their child under 15;

- the online social networking service must verify the age of its underage users and of the holders of parental authority. For this purpose, it must use technical solutions that comply with a reference framework published by the Regulatory Authority for Audiovisual and Digital Communication (Arcom) after consulting the National Commission on Informatics and Liberty (CNIL).
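The registration rule in the list above reduces to a simple decision, sketched below in Python. This is only an illustration of the logic as summarized here: the function and constant names are hypothetical, and the sketch assumes the user's age and the parental authorization have already been established by a technical solution that meets the Arcom reference framework, which is the hard part and is out of scope.

```python
# Threshold taken from the requirement above; the names are illustrative only.
PARENTAL_AUTHORIZATION_AGE = 15


def may_hold_account(verified_age: int, has_parental_authorization: bool) -> bool:
    """Decide whether a new or existing account is allowed under the rule above.

    Assumes `verified_age` and `has_parental_authorization` come from a
    compliant verification step; this function performs no verification itself.
    """
    if verified_age >= PARENTAL_AUTHORIZATION_AGE:
        return True
    # Under 15: allowed only with express authorization from a parent or guardian.
    return has_parental_authorization


if __name__ == "__main__":
    print(may_hold_account(14, has_parental_authorization=False))
    print(may_hold_account(14, has_parental_authorization=True))
    print(may_hold_account(15, has_parental_authorization=False))
```

Because the same condition applies to existing accounts, the same check could be reused when re-evaluating accounts created before the law takes effect.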

Status:

- Law no. 2023-556 was signed into law on July 7, 2023.

- Law no. 2023-556 will enter into force on a date to be specified by decree.

Exemptions, if any: The obligations do not extend to non-profit online encyclopedias or to non-profit educational or scientific directories.

Applicable sanctions: Fines of up to 1% of the company's annual global turnover.

United Kingdom

Online Safety Act

Regulation overview: The Online Safety Act is a set of rules to protect children and adults from illegal and harmful content online. It makes internet service providers and search engine providers more responsible for their users' safety on their platforms.

Applicability: The Online Safety Act imposes legal requirements on:

- providers of internet services that allow users to encounter content generated, uploaded, or shared by other users;

- providers of search engines that enable users to search multiple websites and databases; and

- providers of internet services that publish or display pornographic content (meaning pornographic content published by a provider).

As well as UK service providers, the act applies to providers of regulated services based outside the UK where they fall within the scope of the act.

Platforms will be required to:

- remove illegal content,

- prevent children from accessing age-inappropriate content,

- enforce age limits and age-checking measures,

- provide parents and children with clear and accessible ways to report problems online when they arise.

On December 5, 2023, OFCOM published guidance on the age assurance measures that online services publishing pornographic content must implement to comply with the Online Safety Act. The guidance is valuable for service providers because one of the topics it covers is which effective age verification systems online services can use to meet their obligations under the act. Examples include photo ID matching, which uses government-issued identity documents such as ID cards or driver's licenses, and facial age estimation, which estimates age by analyzing facial features.

Link: Online Safety Act

Status: The act received Royal Assent and became law on October 26, 2023.

Applicable sanctions: Fines of up to £18 million or 10% of annual global turnover, whichever is greater. Criminal action against senior managers.
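The "whichever is greater" wording means the £18 million figure acts as a floor on the maximum, not a ceiling. A minimal Python sketch of that arithmetic follows; the function name is hypothetical and the result is only the upper bound described above, not a prediction of what OFCOM would actually impose.

```python
FIXED_CAP_GBP = 18_000_000   # fixed amount named in the sanction above
TURNOVER_SHARE_PERCENT = 10  # share of annual global turnover


def osa_maximum_fine_gbp(annual_global_turnover_gbp: float) -> float:
    """Upper bound on a fine: the greater of £18 million or 10% of turnover."""
    turnover_based_cap = annual_global_turnover_gbp * TURNOVER_SHARE_PERCENT / 100
    return max(FIXED_CAP_GBP, turnover_based_cap)


if __name__ == "__main__":
    # A smaller company is still exposed to the fixed £18 million maximum.
    print(osa_maximum_fine_gbp(50_000_000))
    # For a large company the turnover-based figure dominates.
    print(osa_maximum_fine_gbp(1_000_000_000))
```

In practice, the turnover-based cap only becomes the binding figure once annual global turnover exceeds £180 million.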

Supervisory authority:

The Office of Communications (OFCOM)

Age Appropriate Design Code

Regulation overview: The Children's Code (or the Age Appropriate Design Code) contains 15 standards that online services must follow to protect children's data in an online environment.

Applicability: The code applies to information society services likely to be accessed by children (including apps, programs, search engines, social media platforms, online marketplaces, online games, content streaming services, and any websites offering other goods or services to users over the internet).

Some notable requirements include:

- Age-appropriate application: Take a risk-based approach to recognizing the age of individual users and ensure the standards in the code are effectively applied to child users;

- Transparency: Provide privacy information and other published terms, policies, and community standards in concise, prominent, and clear language suited to the age of the child;

- Default settings: Set privacy settings to high by default, unless there is a compelling and demonstrable reason for a different setting;

- Data minimization: Collect and retain only the minimum amount of personal information needed to provide the elements of the service in which a child is actively and knowingly engaged.

Link: Age Appropriate Design Code

Status: This code came into force on September 2, 2020, with a 12-month transition period.

Exemptions, if any: The requirements do not apply to some services provided by public authorities; websites that only provide information about a real-world business or service; traditional voice telephony services; general broadcast services; and preventive or counseling services.

Applicable sanctions: Enforcement takes place under the UK GDPR and the Privacy and Electronic Communications Regulations. Online services may be issued assessment notices, warnings, reprimands, enforcement notices, and penalty notices. For serious breaches of the data protection principles, GDPR fines can be imposed (up to €20 million or 4% of annual worldwide turnover, whichever is greater).

Supervisory authority:

Information Commissioner's Office (ICO)

Ireland

Fundamentals for a Child-Oriented Approach to Data Processing

Regulation overview

The Fundamentals for a Child-Oriented Approach to Data Processing are a set of standards that online services must follow to protect children's data in an online environment. They set out 14 fundamental principles to be followed.

Applicability:

The Data Protection Commission considers that organizations should comply with the standards and expectations set out in the Fundamentals when their services are directed at, intended for, or likely to be accessed by children.

Some notable requirements include:

- Floor of protection: Online service providers should provide a "floor" of protection for all users unless they take a risk-based approach to verifying the age of their users, so that the protections set out in the Fundamentals are applied to all processing of children's data.

- Clear-cut consent: When a child has given consent to the processing of their data, that consent must be freely given, specific, informed, and unambiguous, made by way of a clear statement or affirmative action.

- Know your audience: Online service providers should take steps to identify their users and ensure that services directed at, intended for, or likely to be accessed by children have child-specific data protection measures in place.

- Minimum user ages aren't an excuse: Theoretical user age thresholds for accessing services do not displace organizations' obligations to comply with the controller obligations under the GDPR and the standards and expectations set out in the Fundamentals where "underage" users are concerned.
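One way to read the "floor of protection" principle is as a default: child-level protections apply to every user unless the service has actually verified that the user is an adult. The Python sketch below illustrates that reading only. The function name is hypothetical, `None` stands for "age not verified", and the adult threshold of 18 is an assumption made for the example rather than a figure taken from the text above.

```python
from typing import Optional

# Assumption for illustration: users aged 18 or over are treated as adults.
ADULT_AGE = 18


def child_protections_apply(verified_age: Optional[int]) -> bool:
    """Return True when the child-specific protections should be applied.

    `verified_age` is None when the service has not verified the user's age,
    in which case the floor of protection applies by default.
    """
    if verified_age is None:
        return True
    return verified_age < ADULT_AGE


if __name__ == "__main__":
    print(child_protections_apply(None))  # unverified user
    print(child_protections_apply(16))    # verified minor
    print(child_protections_apply(25))    # verified adult
```

Note that a declared minimum age in the terms of service does not change the outcome here, which mirrors the last principle in the list: only a verified age lifts the default.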

Link:

Fundamentals for a Child-Oriented Approach to Data Processing

Applicable sanctions:

- Not provided, as this is not a standalone law.

- Compliance violations would likely be enforced under the GDPR.

Supervisory authority:

Data Protection Commission

2. Actionable insights for your business

Familiarize yourself with laws and regulations:

To pave the way to compliance, businesses must first familiarize themselves with the relevant laws and regulations in the regions where they operate. This includes understanding the scope, the specific age thresholds for different types of content, the authorized age verification measures, and the penalties for non-compliance. Informed legal counsel can provide valuable guidance in this area.

Assess current practices

Businesses are advised to assess their current practices and identify potential gaps in age verification. This process ranges from reviewing website design and user registration processes to content-specific access controls. It is important to remember that age verification is not one-size-fits-all; what works for a business in one jurisdiction may not work in another.

Implement age verification systems

Implementing robust age verification systems is a crucial step. These systems vary widely, and simple forms such as self-declaration are becoming less and less attractive. Meanwhile, more sophisticated methods, such as identity verification services, have become better suited for compliance purposes. Although robust age verification solutions usually require more resources, they offer greater accuracy and reliability, and businesses should weigh the costs and benefits of different systems to determine the most suitable option.

Train internal staff

Training internal staff on age verification is equally important. Employees should understand why age verification matters, know how to use the systems, and be able to handle any issues that arise, especially those covered by applicable laws or regulations. Regular training sessions can help ensure that staff stay up to date with the latest practices and regulations.

Stay up to date

Finally, businesses should always aim to stay up to date, which means regularly reviewing and updating their age verification practices. As laws and technologies constantly evolve, businesses need to keep abreast of these changes to remain compliant and efficient. Regular audits help identify issues early and allow for timely rectification.

3. How can Veriff help?

Veriff operates globally, and our online age verification lets our customers confirm a person’s eligibility to access restricted content with little to no effort. Veriff has an arsenal of age verification solutions that can be used to check that a person has reached the required age: for example, age validation, which uses government-issued identity documents such as an ID card or driver’s license, or age estimation, which lets users verify their age without requiring identity documents. This means our customers can comply with existing laws that require age verification systems and stay ahead of the curve.

Veriff’s Age Validation solution lets your business seamlessly confirm whether users are above a minimum age threshold. You can choose to grant access to users of the appropriate age while underage users are automatically declined.
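To illustrate what a minimum age threshold check involves once a date of birth has been read from a verified document, here is a minimal Python sketch. It is not Veriff’s API or implementation: the function names are hypothetical, and the sketch assumes the date of birth has already been extracted and validated elsewhere.

```python
from datetime import date
from typing import Optional


def age_on(date_of_birth: date, on_date: date) -> int:
    """Age in completed years on `on_date`."""
    # Compare (month, day) tuples to see whether this year's birthday has passed.
    had_birthday = (on_date.month, on_date.day) >= (date_of_birth.month, date_of_birth.day)
    return on_date.year - date_of_birth.year - (0 if had_birthday else 1)


def meets_age_threshold(date_of_birth: date, minimum_age: int,
                        on_date: Optional[date] = None) -> bool:
    """Grant access only if the user has reached `minimum_age`."""
    reference = on_date if on_date is not None else date.today()
    return age_on(date_of_birth, reference) >= minimum_age


if __name__ == "__main__":
    dob = date(2006, 6, 15)
    print(meets_age_threshold(dob, 18, on_date=date(2024, 6, 14)))  # day before 18th birthday
    print(meets_age_threshold(dob, 18, on_date=date(2024, 6, 15)))  # on 18th birthday
```

Passing the reference date explicitly keeps the check deterministic and testable; a production system would also need to decide which time zone defines "today".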

Veriff’s Age Estimation solution uses facial biometric analysis to estimate a user’s age without requiring the user to provide an identity document. This low-friction solution will enable you to convert more users faster and make it easy for businesses to verify any users who want to access age-gated products or services but who do not want to share their identity documents, possibly for privacy or ethical reasons.

Veriff’s age verification solutions are uniquely positioned to support platforms such as SuperAwesome in their efforts to create a safer online environment for children. By seamlessly integrating Veriff’s age validation and estimation tools, SuperAwesome can ensure that only users above the required age threshold access its platforms, effectively strengthening its safety measures. This collaboration allows SuperAwesome to uphold its commitment to safeguarding young audiences while giving developers the tools they need to meet regulatory requirements and foster a safe online community.
