Key takeaways from the Veriff Deepfake Deep Dive Report

Discover the true threat of generative AI and why cultivating a fraud-prevention ecosystem is the only way to keep your business safe

Chris Hooper

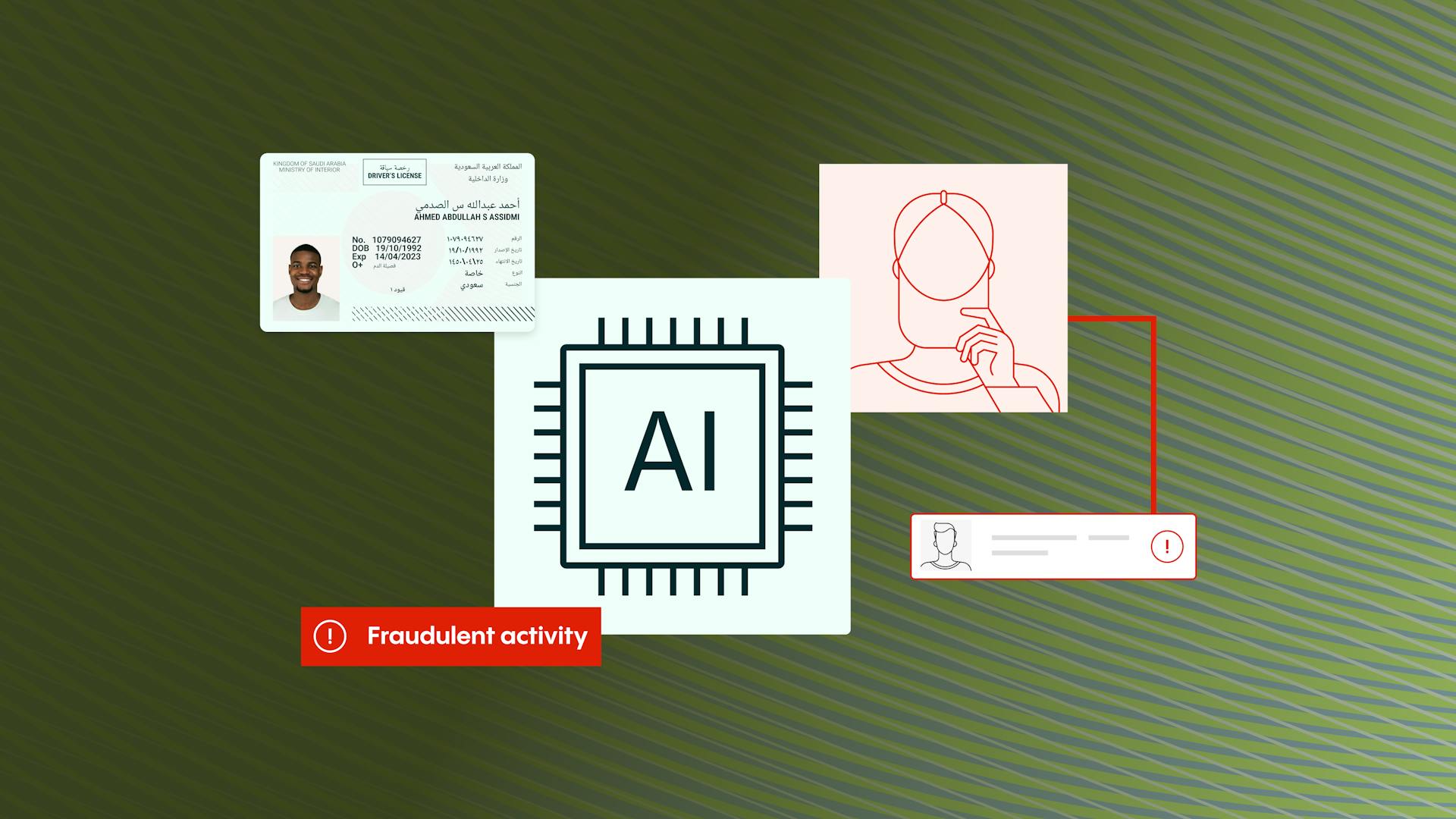

Artificial intelligence (AI) is transforming the digital economy – and presenting new opportunities for fraudsters. How can fraud experts fight back and protect their businesses from the evolving danger?

The Veriff Deepfake Deep Dive Report explores this issue in detail – outlining the real extent of the AI threat to businesses and, crucially, what can be done to fight back.

Here are the key takeaways:

1. The AI threat is becoming more sophisticated

Fabricated documents have long been a security challenge for online platforms. However, generative AI has turbocharged the threat, enabling criminals to create fake documents and deepfake video and audio content that are far more convincing than ever before. About 500,000 voice and video deepfakes were shared around the world in 2023.

The quality and sophistication of the threat is fueled by the deep learning models behind generative AI. These models group algorithms into artificial neural networks that are loosely modeled on the structure of the human brain. They can then be trained at a “deeper” level to make complex, non-linear correlations between large amounts of diverse and unstructured data.

As a result, deep learning models can learn independently from their environment and from past mistakes to perform tasks to an extremely high standard – including creating fake audio and video. For example, some models learn from real-world images and text and then generate new content that humans cannot reliably distinguish from the real thing.

2. The most common AI threats you need to know about

We have identified five common techniques adopted by fraudsters as they deploy AI and deep learning in their activities. These are:

- Face swaps, where one person’s face is superimposed onto a photo of someone else

- Lip syncing, where algorithms map a voice recording onto a video of someone saying something else

- Puppets, where the desired movements of an actor are overlaid onto the target subject, meaning the ‘puppet’ seems to move like the ‘master’

- GANs and autoencoders, where generative adversarial networks (GANs) are trained on internet and social media data on facial expressions and movements, then combined with an autoencoder to create a fictitious digital avatar

- Fake documents, where templates, photo-editing tools, and scripts are used to create real-looking documents (although technically this does not use generative AI)

We examine these techniques in more detail in the Veriff Deepfake Deep Dive Report.

3. The impact of deepfakes

Deepfakes are especially effective when deployed against enterprises with disjointed and inconsistent identity management processes and poor cybersecurity. They can be used both to attack existing accounts and to fraudulently open new ones.

The most common results we are seeing include:

- Opening fraudulent accounts: Synthetic identities and faked documents are increasingly used to pass biometric checks during the Know Your Customer (KYC) stage of the onboarding process

- Account takeover (ATO): Sophisticated deepfakes are combined with other hacking techniques to gain access to existing accounts

In the report, we examine in detail how the AI and deepfake threat is evolving and what businesses can do to make sure they and their users are protected.

4. There is no silver bullet

To stay ahead of fraudsters, you need a constantly evolving, multi-layered approach that combines a range of threat mitigation tools. Unfortunately, there is no one-size-fits-all solution to combating identity fraud, especially when bad actors employ the kind of sophisticated deepfake technology that is increasingly available.

Instead, you need to employ a coordinated, multifaceted approach that combines stringent checks on the information a user supplies with a range of contextual signals.

This should include:

- Robust and comprehensive checks on asserted identity documents

- Examination of key device attributes

- Treating the absence of data as a risk factor in certain circumstances

- Counter-AI to identify manipulation of incoming images

- Actively looking for patterns across multiple sessions and customers

- Biometric analysis of supplied photographic and video images
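To make the layered idea concrete, here is a minimal sketch of how signals from several checks could be combined into one risk score, with a missing signal counted as a risk in its own right. It is an illustration only, not a description of Veriff's system: the signal names, the weights, and the `MISSING_SIGNAL_RISK` value are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical weights per layer, chosen only for illustration (they sum to 1).
WEIGHTS = {
    "document": 0.35,      # checks on the asserted identity document
    "biometric": 0.35,     # analysis of supplied photos and video
    "device": 0.15,        # key device attributes
    "manipulation": 0.15,  # counter-AI check for manipulated images
}

# Hypothetical risk assigned to a layer whose data is absent.
MISSING_SIGNAL_RISK = 0.6


@dataclass
class SessionSignals:
    """Risk per layer in [0, 1]; None means the signal was not available."""
    document: Optional[float] = None
    biometric: Optional[float] = None
    device: Optional[float] = None
    manipulation: Optional[float] = None


def combined_risk(signals: SessionSignals) -> float:
    """Return the weighted average risk across all layers."""
    total = 0.0
    for name, weight in WEIGHTS.items():
        value = getattr(signals, name)
        if value is None:
            # Absence of data is treated as a risk factor, not as "no risk".
            value = MISSING_SIGNAL_RISK
        total += weight * value
    return total


complete = SessionSignals(document=0.1, biometric=0.1, device=0.1, manipulation=0.1)
no_device = SessionSignals(document=0.1, biometric=0.1, device=None, manipulation=0.1)
print(combined_risk(complete), combined_risk(no_device))
```

In this sketch, a session that withholds device data scores higher than an otherwise identical session that supplies it, so no single check decides the outcome on its own.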

For Veriff, the first line of defense is our automated technologies. If AI cannot determine whether a document is genuine, we send it to human experts for analysis.
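The hand-off from automation to human experts can be pictured as a simple routing rule. The sketch below assumes an automated check that returns a fraud score between 0 and 1; the thresholds and the `route` function are hypothetical and are not taken from Veriff's product.

```python
from enum import Enum


class Decision(Enum):
    APPROVE = "approve"
    DECLINE = "decline"
    HUMAN_REVIEW = "human_review"


# Hypothetical thresholds, chosen only for illustration.
APPROVE_BELOW = 0.2
DECLINE_ABOVE = 0.8


def route(fraud_score: float) -> Decision:
    """Decide automatically when the score is clear-cut, otherwise escalate."""
    if not 0.0 <= fraud_score <= 1.0:
        raise ValueError("fraud_score must be between 0 and 1")
    if fraud_score < APPROVE_BELOW:
        return Decision.APPROVE
    if fraud_score > DECLINE_ABOVE:
        return Decision.DECLINE
    # The automated check is not confident either way: send to a human expert.
    return Decision.HUMAN_REVIEW


for score in (0.05, 0.5, 0.95):
    print(score, route(score).value)
```

Narrowing the gap between the two thresholds sends fewer sessions to human reviewers but leaves more borderline cases to automation; where to set them is a business decision.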

Our layered defenses analyze the person, their asserted identity document, and their network and device, minimizing the risk of manipulation and ensuring only trusted, genuine people gain access to your services. What’s more, the mechanisms we use to detect fraud (including deepfakes) are not visible to the user and as such are harder to reverse engineer.
