UK AI Regulation 2024: Balancing innovation and risks in finance

As the UK’s AI landscape rapidly evolves, regulators must balance nurturing innovation with managing risks. With a focus on growth and light-touch oversight, the UK is investing significantly in AI. But what does this mean for the financial sector, where opportunities and risks abound? Explore how this approach could reshape the future of finance.

As artificial intelligence (AI) continues to shape various sectors, the UK is uniquely positioned. The nation’s AI sector is thriving (according to the UK’s AI sector study), with rapid advancements in healthcare, transport, and economic productivity. Yet, the regulatory landscape around AI remains relatively light. While the UK government acknowledges the need to oversee AI’s growth and potential risks, its approach favours innovation over stringent control.

A pro-innovation approach to AI regulation

In March 2023, the UK’s Department for Science, Innovation and Technology unveiled a white paper titled “AI Regulation: A Pro-Innovation Approach”. This document outlines the government’s intention to regulate AI in a way that fosters innovation. The white paper emphasizes AI’s potential to revolutionize key areas, such as healthcare and economic productivity, while also recognizing the risks inherent in the technology, such as ethical concerns, operational resilience, and transparency.

Instead of drafting comprehensive AI laws, the UK government is empowering existing sector-specific regulators. These regulators will be tasked with addressing AI risks based on five guiding principles:

Safety, security, and robustness

- AI applications must operate securely, safely, and robustly, with risks carefully managed.

Transparency and explainability

- Organizations developing and deploying AI should communicate its usage and provide appropriate detail about the system’s decision-making process according to the associated risks.

Fairness

- AI usage should adhere to existing UK laws, such as the Equality Act 2010 or UK GDPR, ensuring that it neither discriminates against individuals nor leads to unfair commercial outcomes.

Accountability and governance

- Measures should be in place to ensure adequate oversight of AI use and clear accountability for its outcomes.

Contestability and redress

- There should be clear avenues for individuals to challenge harmful outcomes or decisions made by AI.

This framework allows regulators to tailor AI governance to their sectors, ensuring a more adaptive and less burdensome approach. The key aim is to avoid “heavy-handed legislation” that could hinder technological advances. However, regulators will be expected to ensure compliance with these principles, offering guidance to companies in their respective sectors.

FCA’s role in AI regulation for financial services

In April 2024, the Financial Conduct Authority (FCA), which oversees the financial services sector in the UK, issued an AI update. The FCA acknowledged the increasing integration of AI in financial services, particularly fintech, and highlighted the emerging risks surrounding operational resilience, outsourcing, and critical third parties.

As AI usage grows in finance, companies are expected to take a proactive approach to managing these risks. The FCA stressed its commitment to working with firms to better understand their AI practices and address potential pitfalls. Companies will likely face increasing scrutiny, particularly regarding how they deploy AI and how it affects customers and the broader market. For financial institutions, this means a heightened focus on ensuring that AI systems are transparent, fair, and secure.

The path forward: gaps in AI regulation and the potential for future legislation

Despite this pro-innovation stance, gaps may still arise between the approaches taken by different regulators. A patchwork of AI regulatory approaches could emerge, creating inconsistencies across industries. To address this, the UK government has left the door open for more comprehensive legislation in the future, should the current framework prove insufficient.

One area where regulation could intensify is AI models and model providers. Discussions around stricter oversight for these areas have gained momentum since the new government took office. This move could pave the way for the UK to adopt more centralized regulations (we could call it the “UK AI Bill”), potentially bringing the country closer to the EU’s AI Act, as part of broader efforts to strengthen UK-EU relations.

Recommendations for AI regulation in the UK’s financial sector

As the UK government continues to assess its AI regulatory framework, financial services firms must stay informed and adaptive. The relatively light regulatory approach gives companies flexibility but also places greater responsibility on them to ensure their AI systems meet ethical and operational standards.

Looking forward, financial services organizations must monitor the evolving regulatory landscape and be prepared for potential changes. Whether the UK introduces more stringent AI regulations or continues with its sector-based approach, operational resilience, fairness, and transparency in AI will remain key concerns for the FCA and other regulators.

In conclusion, while the UK’s AI regulation is currently light, the landscape is evolving. Financial services firms must remain agile, proactive, and ready to navigate both the opportunities and risks AI presents as regulation catches up to innovation.

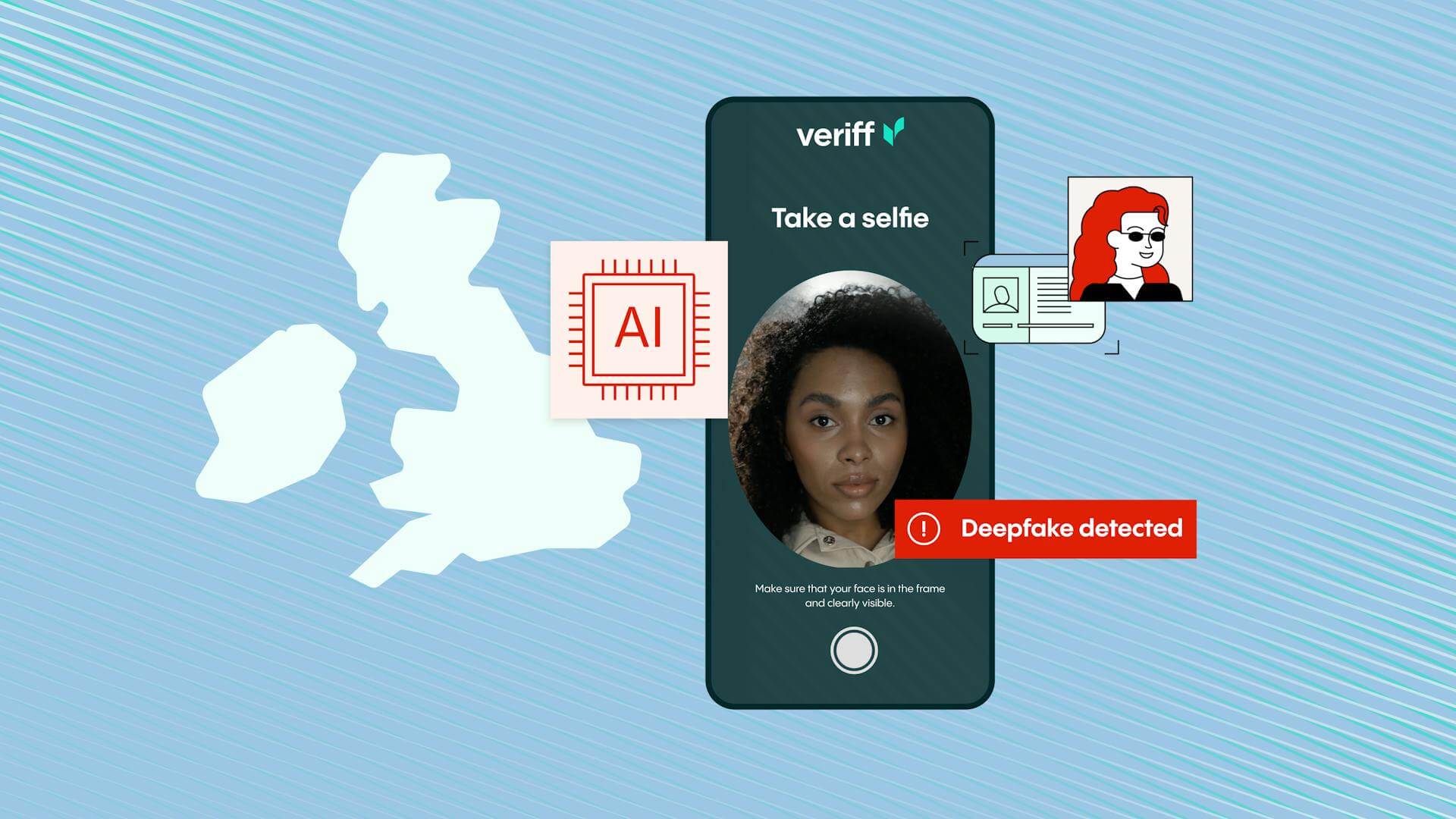

How can Veriff help?

1. AI-powered Identity Verification solution

Veriff’s AI-driven identity verification (IDV) solution allows financial institutions to securely verify customer identities while meeting regulatory requirements around transparency, fairness, and accountability. By automating the verification process, Veriff helps institutions streamline operations, reduce human error, and ensure compliance with the requirements that apply to their use of AI.

2. Ensuring fairness and security

Veriff’s technology is designed to detect fraud, ensure data privacy, and mitigate risks, all while taking into account the operational resilience and outsourcing requirements that apply to the service.

3. Adaptable to sector-specific regulations

As the UK takes a sector-based approach to AI regulation, Veriff’s solutions are flexible and can be adapted to meet the specific regulatory requirements of different industries. For financial services firms, Veriff ensures that AI technologies align with sector-specific principles, such as safety, security, and accountability.

4. Transparency and explainability in AI

With increasing scrutiny of AI models and their impact, Veriff offers transparency in how AI is applied to identity verification processes. Our technology is explainable, enabling financial services firms to demonstrate compliance with the principles of transparency and to build trust in AI-driven operations.

5. Contestability and redress

As Veriff provides a B2B service, our customers always have a clear avenue to challenge any decision they consider unexplained or questionable.