
The European Union AI Act: Introduction to the first regulation on artificial intelligence

The use of artificial intelligence in the European Union (EU) is now regulated by the EU AI Act, the world’s first comprehensive AI law. Find out how it works.

The European Union’s Artificial Intelligence Act explained

Advances in technology and artificial intelligence (AI) have prompted calls for protective regulation to prevent risks and harmful outcomes for people across the globe. One place where such rules have taken shape is Europe. In April 2021, the European Commission proposed the first comprehensive framework to regulate the use of AI. The EU’s priority is ensuring that AI systems used in the EU are safe, transparent, traceable, non-discriminatory, and environmentally friendly.

At Veriff, we’re constantly developing our biometric identification solutions. We use AI and machine learning to make our identification process faster, safer, and better. We operate globally, and our legal teams continually monitor the various local legal landscapes, which puts us in a strong position to navigate these regulations.

1. What is the EU’s AI Act?

The AI Act is a European law on artificial intelligence (AI) and the first comprehensive AI law adopted by a major economic power.

It was proposed by the EU Commission in April 2021, adopted in 2024, and published in the Official Journal of the EU as Regulation (EU) 2024/1689.

Like the EU’s General Data Protection Regulation (GDPR), which took effect in 2018, the EU AI Act could become a global standard, determining to what extent AI has a positive rather than negative effect on your life, wherever you may be. The EU’s AI regulation is already making waves internationally.

2. Is the EU’s AI Act already adopted and in force?

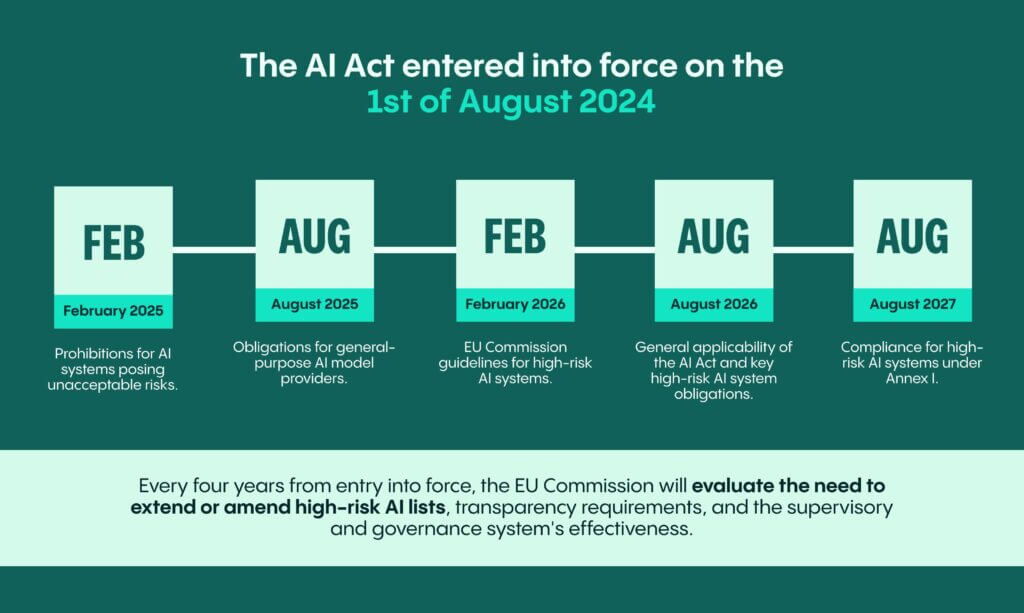

Yes, the AI Act entered into force on 1 August 2024. This started the countdown to the Act’s other milestones, which apply in stages.

For most businesses, these are the key dates to keep in mind:

- By February 2025, 6 months after entry into force, the prohibitions on AI systems posing unacceptable risks (referred to as “prohibited AI systems”) apply. This means that by then, companies need to understand whether the systems they provide or use qualify as prohibited and make adjustments as needed.

- By August 2025, 12 months after entry into force, the obligations for providers of general-purpose AI models apply, together with the EU Commission’s first annual review of the lists of prohibited AI systems and of high-risk AI systems under Annex III of the AI Act. Annexes I and III are the key annexes on which the designation of high-risk AI systems is built.

- By February 2026, 18 months after entry into force, the EU Commission must provide guidelines specifying the practical implementation of the rules for classifying systems as high-risk AI systems. This will be an important milestone that should clarify many gray areas for businesses.

- In August 2026, 24 months after entry into force, the AI Act becomes generally applicable, together with the key obligations for high-risk AI systems connected with Annex III. Note that if a system was placed on the market or put into service before August 2026, the high-risk obligations apply to it only if, from August 2026 onward, it undergoes significant changes in its design.

- In August 2027, 36 months from entry into force, the high-risk AI systems under Annex I will need to comply with obligations deriving from the AI Act.
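Because each milestone above is defined as a number of months after entry into force, the calendar can be reproduced with simple month arithmetic. The Python sketch below is illustrative only: the `MILESTONES` labels and the helper names are our own shorthand, not terms from the Act, and it resolves dates only to the month, since the regulation itself fixes the exact application days (for example, 2 February 2025 for the prohibitions).

```python
from datetime import date

# Entry into force of the AI Act, as stated in this article.
ENTRY_INTO_FORCE = date(2024, 8, 1)

# Months after entry into force -> short paraphrase of the milestone (not legal text).
MILESTONES = {
    6: "Prohibited AI systems rules apply",
    12: "General-purpose AI model obligations apply",
    18: "Commission guidelines on high-risk classification due",
    24: "AI Act generally applicable (Annex III high-risk obligations)",
    36: "Annex I high-risk obligations apply",
}


def add_months(start: date, months: int) -> tuple[int, int]:
    """Return the (year, month) reached by adding `months` to `start`."""
    total = start.year * 12 + (start.month - 1) + months
    return total // 12, total % 12 + 1


def milestone_calendar(start: date = ENTRY_INTO_FORCE) -> list[tuple[str, str]]:
    """List (YYYY-MM, label) pairs in chronological order."""
    rows = []
    for offset in sorted(MILESTONES):
        year, month = add_months(start, offset)
        rows.append((f"{year:04d}-{month:02d}", MILESTONES[offset]))
    return rows


if __name__ == "__main__":
    for when, label in milestone_calendar():
        print(when, label)
```

Running it prints one line per milestone, from `2025-02` for the prohibitions to `2027-08` for Annex I high-risk systems. Month-level output is a deliberate choice: for compliance planning, rely on the dates stated in the regulation rather than computed ones.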

The AI Act also introduces a periodic evaluation system to keep it relevant and up to date. Every four years, starting four years after entry into force, the EU Commission will assess the need to extend or add items to the high-risk AI lists, amend transparency requirements, and review the effectiveness of the supervision and governance system. The functioning of the AI Office, the standardization process, and the voluntary codes of conduct will also be evaluated.

3. How will the EU regulate with the AI Act?

The AI Act pursues the following objectives:

- ensure that AI systems placed on the EU market and used in the EU are safe and respect existing laws on fundamental rights and Union values;

- ensure legal certainty to facilitate investment and innovation in AI;

- enhance governance and effective enforcement of existing law on fundamental rights and safety requirements applicable to AI systems;

- facilitate the development of a single market for lawful, safe, and trustworthy AI applications and prevent market fragmentation.

The EU regulates AI systems based on the level of risk they pose to people’s health, safety, and fundamental rights. This approach tailors the type and content of the rules to the intensity and scope of the risks (from high to minimal) that AI systems can generate. The law assigns AI applications to three risk categories:

- First, applications and systems that create an unacceptable risk are banned. Examples include untargeted scraping of facial images from the internet or CCTV footage to create facial recognition databases, social scoring, and AI systems that manipulate human behavior to circumvent users’ free will.

- Second, high-risk AI systems, such as systems that determine access to educational institutions or are used for recruiting, are subject to specific and thorough legal requirements, including risk-mitigation systems, high-quality data sets, activity logging, detailed documentation, clear user information, human oversight, and a high level of robustness, accuracy, and cybersecurity.

- Third, applications that are neither banned nor listed as high-risk are regulated lightly. These are called minimal-risk systems, and the vast majority of AI systems are expected to fall into this category. The key requirement here is transparency: if an AI system is designed to interact with people, they must be informed that they are interacting with an AI system, unless this is obvious from the context. In addition, providers of AI systems that generate or manipulate audio, image, video, or text content must ensure that the output is marked as artificially generated or manipulated.

- A separate set of rules is introduced for general-purpose AI models.

In the following sections, we will examine the regulation around prohibited AI systems, high-risk AI systems, and general-purpose AI models.

Talk to us

Talk to one of Veriff’s fraud experts to see how IDV can help your business.

4. What does the AI Act contain?

- Its scope and the definitions that apply to participants in the AI lifecycle and ecosystem

- The list of prohibited AI systems

- Detailed rules for high-risk AI systems

- Measures supporting innovation (e.g., regulatory sandboxes)

- Rules on governance and implementation of the AI Act, and codes of conduct

- Rules on fines

5. To whom does the AI Act apply?

- Providers that place AI systems on the market or put them into service in the EU, irrespective of whether those providers are established within the EU or in a third country (e.g., the US);

- Deployers of AI systems located within the EU;

- Providers and deployers of AI systems located in a third country, where the output produced by the system is used in the EU or the system affects people in the EU.

6. How to ensure compliance with the AI Act?

We recommend closely monitoring developments around the AI Act to stay up to date with the guidance still to be issued. In the meantime, here are some practical tips:

- Work your way through the text: your organization needs to pinpoint where it sits in the AI “value chain”. For example, being a provider or a deployer of AI systems subjects you to potentially different obligations. Couple this with a map of where your organization uses AI systems and models.

- After you have completed the mapping, work your way through the systems to understand risk-designation under the AI Act and obligations connected with it. Use outside technical and legal help, if needed.

- Work with your Legal, Risk, Quality, and Engineering/Product Development departments to identify risks around AI usage in general.

- An extensive set of technical and non-technical standards will be created by the European Standardisation Organisations and/or by the European Commission with the help of experts. This work is worth monitoring. Some existing standards already help in understanding certain obligations for products identified as high-risk AI systems, and there are already standards for quality management and risk management systems. These give a baseline for what must be considered if you fall into the high-risk AI provider category.
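The mapping step described in these tips can start as a simple internal inventory. The Python sketch below is a hypothetical structure, not a format prescribed by the AI Act: `AISystemRecord`, `Role`, `RiskTier`, and `needs_attention` are illustrative names, the tiers mirror the categories described in section 3, and assigning a tier remains a legal assessment that the code does not perform.

```python
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    """Where the organization sits in the AI value chain for this system."""
    PROVIDER = "provider"
    DEPLOYER = "deployer"


class RiskTier(Enum):
    """Risk categories as described in this article; choosing one is a legal judgment."""
    PROHIBITED = "prohibited"
    HIGH = "high"
    MINIMAL = "minimal"
    UNASSESSED = "unassessed"  # mapped but not yet reviewed


@dataclass
class AISystemRecord:
    """One entry in a hypothetical internal AI inventory."""
    name: str
    role: Role
    tier: RiskTier = RiskTier.UNASSESSED


def needs_attention(inventory: list[AISystemRecord]) -> list[str]:
    """Return names of systems still to be reviewed or carrying heavy obligations."""
    flagged = {RiskTier.UNASSESSED, RiskTier.PROHIBITED, RiskTier.HIGH}
    return [record.name for record in inventory if record.tier in flagged]


if __name__ == "__main__":
    # Hypothetical example systems, for illustration only.
    inventory = [
        AISystemRecord("cv-screening", Role.DEPLOYER, RiskTier.HIGH),
        AISystemRecord("support-chatbot", Role.PROVIDER, RiskTier.MINIMAL),
        AISystemRecord("fraud-scoring", Role.PROVIDER),
    ]
    print(needs_attention(inventory))  # ['cv-screening', 'fraud-scoring']
```

Defaulting every new record to `UNASSESSED` keeps systems that have been mapped but not yet reviewed visible, rather than letting them silently count as minimal risk.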

7. AI Act enforcement and penalties

Fines under the AI Act can reach €35 million or 7% of total worldwide annual turnover (whichever is higher) for violations involving prohibited AI systems, €15 million or 3% for violations of most other obligations, and €7.5 million or 1% for supplying incorrect information. Lower ceilings apply to SMEs and start-ups: for them, the lower of the two amounts applies.
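The “whichever is higher” rule turns each fine ceiling into the maximum of two amounts. The Python sketch below computes only that statutory ceiling, not an actual fine, which authorities decide case by case. It assumes the caps in the adopted text, Article 99 of Regulation (EU) 2024/1689, including 1% of turnover for incorrect information and, for SMEs and start-ups, the lower rather than the higher of the two amounts; the dictionary keys and `max_fine` are hypothetical names.

```python
from decimal import Decimal

# Fine ceilings per infringement type: (fixed amount in EUR, share of total
# worldwide annual turnover). Values assume Article 99 of Regulation (EU) 2024/1689;
# the dictionary keys are hypothetical labels, not legal terms.
FINE_CAPS = {
    "prohibited_practices": (Decimal("35000000"), Decimal("0.07")),
    "other_obligations": (Decimal("15000000"), Decimal("0.03")),
    "incorrect_information": (Decimal("7500000"), Decimal("0.01")),
}


def max_fine(tier: str, annual_turnover_eur: Decimal, is_sme: bool = False) -> Decimal:
    """Return the statutory ceiling for one infringement type, in EUR.

    Larger undertakings: the higher of the fixed amount and the turnover share.
    SMEs and start-ups (assumed per Article 99(6)): the lower of the two.
    The fine actually imposed is decided case by case and may be far below this.
    """
    fixed_amount, turnover_share = FINE_CAPS[tier]
    turnover_amount = annual_turnover_eur * turnover_share
    if is_sme:
        return min(fixed_amount, turnover_amount)
    return max(fixed_amount, turnover_amount)


if __name__ == "__main__":
    # €1 billion turnover: 7% (€70 million) exceeds the fixed €35 million.
    print(max_fine("prohibited_practices", Decimal("1000000000")))
    # SME with €10 million turnover: the lower amount, 7% (€700,000), applies.
    print(max_fine("prohibited_practices", Decimal("10000000"), is_sme=True))
```

Using `Decimal` rather than floats avoids binary rounding artifacts when working with monetary amounts and percentages.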
