The impact of AI and deepfakes on US political campaigns: Entering a new era

Are you ready for the biggest election year on record? Dive into the critical issues of identity verification and fraud concerns that could shape the outcome!

Throughout American history, instances of election fraud have been well documented by historians and journalists. One of the most infamous examples is Tammany Hall, a political organization in 19th-century New York City, led by figures like Boss Tweed.

The common types of election fraud are many: voter intimidation, misuse of proxy voting, vote buying, misleading ballot papers, ballot stuffing, vote miscounting, destruction of ballots, and the announcement of falsified results. Increasingly sophisticated AI can intensify these fraudulent activities, particularly those that depend on deception, which calls for stricter vigilance and regulatory measures to uphold the principles of free and fair elections.

2024 will be a monumental election year globally, with at least 64 countries, representing a combined population of about 49% of the world, heading to the polls. The 2024 United States elections will be a pivotal moment for the country. AI technology, including AI-generated images and AI-powered tools, is expected to play a significant role in shaping political campaigns, voter engagement, and potentially the outcomes. Despite the anticipation, there are growing concerns about the integrity of these elections, as the intersection of generative AI advancements and vulnerabilities within election systems has cast a shadow over the process.

Leveraging AI for enhanced political campaigns and election security

While AI can be a powerful tool for engaging voters and personalizing campaign strategies, it raises misinformation and election security concerns. As AI technology continues to evolve, its applications in the political arena are becoming more sophisticated, influencing various aspects of the election process, including political ads and political micro-targeting.

These concerns are most clearly seen in three main areas: the risks associated with remote voting, the impact of deepfakes on the election process, and the manipulation of voting patterns and election outcomes through fake images, fake news, and AI-generated content on social media platforms.

Risks of remote voting

Remote voting, while designed to promote wider participation, introduces a myriad of vulnerabilities. From coercion and identity fraud to compromised ballot secrecy and the interception of votes by bad actors, these risks threaten the foundation of election integrity.

Manipulation can take the form of exploiting technological vulnerabilities as well as traditional voter fraud. The Heritage Foundation shares a sampling of recent proven instances of election fraud across the US, while digital threats extend to hacking into online voting systems, creating fake social media campaigns to influence voter opinions, and spreading misinformation to manipulate public sentiment.

In a striking example, a team of Israeli contractors operating under the pseudonym “Team Jorge” was exposed by an undercover media investigation after claiming to have manipulated more than 30 elections worldwide. Led by Tal Hanan, a former Israeli special forces operative, the group said it had used hacking, sabotage, and automated disinformation on social media to secretly interfere in elections for more than two decades.

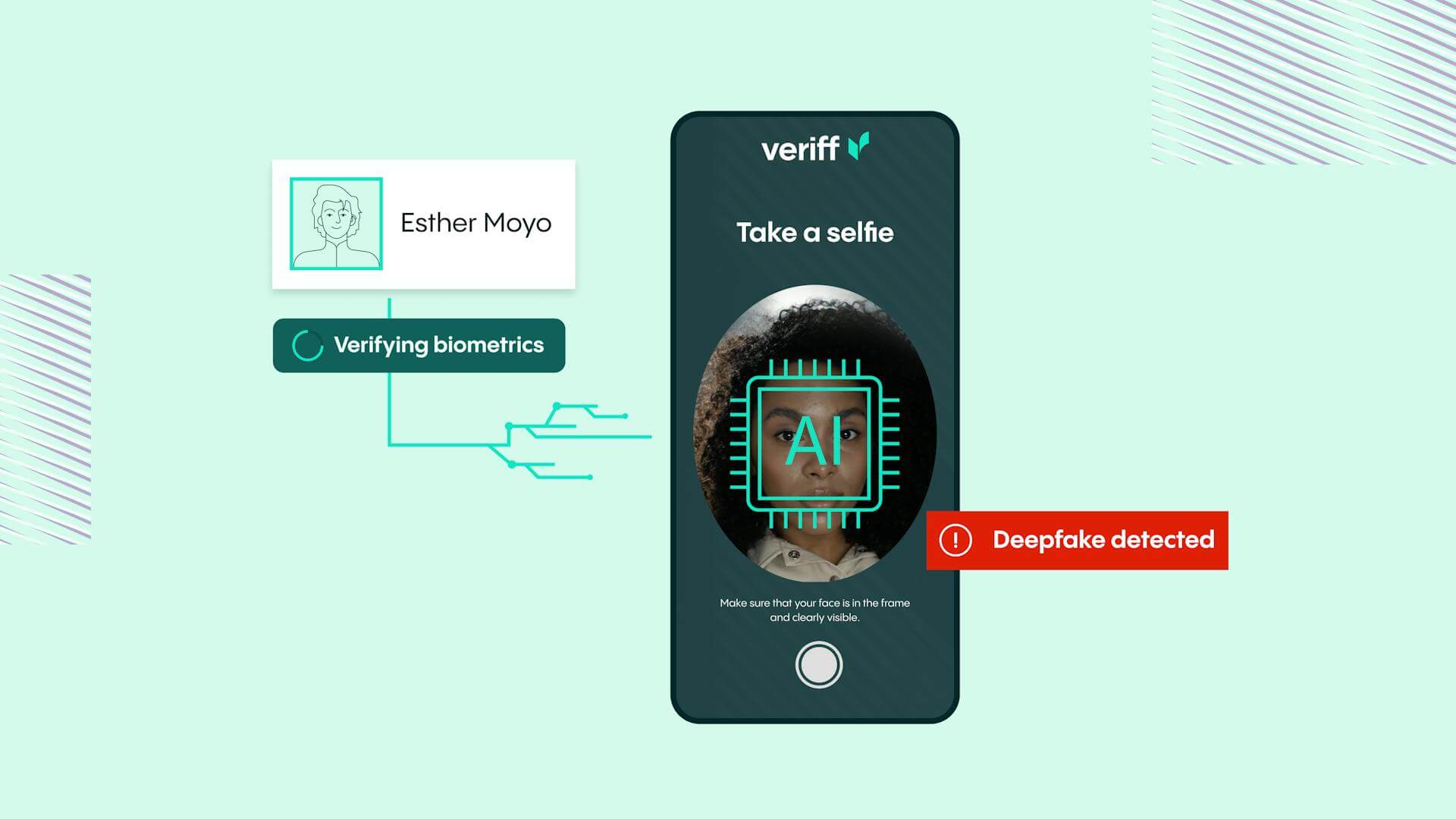

This underscores the need to consider advanced identity verification solutions, such as biometrics, in place of outdated methods that can easily be manipulated. Doing so can make remote voting more secure and trustworthy, helping safeguard the integrity of elections in the digital age.

A new era of deepfake threats

The Veriff Identity Fraud Report 2024 reveals a stark reality: generative AI has turbocharged the deepfake threat. Criminals now possess the tools to create AI-generated video, voice, and other content that is virtually indistinguishable from reality.

Recent incidents in which world leaders were impersonated, including a deepfake of US President Joe Biden’s voice and fabricated or tampered content featuring Ukraine’s President Volodymyr Zelenskyy, underscore the need to address this threat to maintain credibility and trust among voters.

The rise in political conspiracy theories also poses a threat to workplace productivity. Employers can proactively support their employees’ civic engagement in the lead-up to the 2024 general election by giving people the tools to distinguish falsehoods from accurate information. Deepfakes and AI-generated content can create convincing false narratives that undermine the integrity of the electoral process. Whether in the form of text, bots, audio, photos, or video, AI-generated content can depict candidates doing or saying things they never did, which can be used to tarnish their reputations or deceive voters.

Additionally, AI can amplify disinformation efforts by generating realistic-looking imagery, sowing confusion among voters. Election officials in the US are concerned that the growing prevalence of generative AI in its various forms could facilitate such assaults on the democratic process in the lead-up to the November 2024 US election, and they are actively seeking strategies to counteract it.

Kaarel Kotkas, Veriff’s CEO, emphasizes in a LinkedIn post the importance of addressing the root cause of digital fraud rather than just its symptoms, which involves identifying the creators of deepfakes and fraudulent content. He advocates properly verified online accounts as a way to encourage critical thinking and protect against misinformation and scams.

Kotkas stresses the need for collaboration between technology companies and identity verification providers to confirm the authenticity of users and tie their identities to online content for enhanced security:

“Tech companies and identity providers can work together to ensure that account owners, posters, and users are truly who they say they are, and that their identity is tied to content to ensure increased accountability and opportunity to question where the information is coming from.”

He argues that acting now is crucial for the future growth of the digital economy, because scalable trust relies on verified online identities.

Social media and fake news

According to the study “Exposure to untrustworthy websites in the 2016 US election”, Facebook was a key vector of exposure to untrustworthy websites. Deepfakes add another layer to this problem, with algorithms fostering echo chambers that amplify misinformation.

Former President Donald Trump stirred controversy by posting a report on his social media website that was riddled with falsehoods about fraud in the 2020 presidential election. Criticized for rehashing unfounded election conspiracy theories without credible evidence, the report highlighted the challenges Trump’s advisers face in controlling his impulses and maintaining a consistent narrative on social media.

The prevalence of echo chambers and their potential role in increasing political polarization shouldn’t be ignored. The Washington Post highlights the importance of discerning reliable sources, drawing on lessons learned from the 2020 election.

Against the backdrop of the 2024 US elections, concerns about deceptive AI-generated content persist. Recent events in New Hampshire, where AI-generated robocalls urged voters to stay home, exemplify the alarming ease with which AI can be used to discourage voter participation and underscore broader concerns about AI-generated falsehoods spreading online. Companies like OpenAI are making efforts to mitigate these challenges, but uncertainty persists about how effectively policies against deceptive AI use will be enforced. The situation feeds into ongoing debates over social media policy, including free speech and the moderation of political content, and prompts consideration of legal and regulatory action to address disinformation and foreign interference in elections.

In conclusion, AI holds both promising opportunities and significant challenges for the 2024 US elections. Its ability to process and analyze data can revolutionize campaign strategies and voter engagement, but it also requires stringent safeguards against misuse. Balancing these factors will be crucial for leveraging AI to enhance the democratic process while protecting it from potential threats.

Political consultants are urged to consider AI tools and large language models to make political campaigns more user-friendly and more effective at persuading voters. Whether in the New Hampshire primary or broader Republican campaigns, artificial intelligence has become an integral part of strategy in modern politics.

How Veriff can help

With generative AI tools reaching mainstream adoption, the vulnerability of election systems to cyberattacks is unprecedented. In the face of evolving threats, businesses can take a proactive stance by embracing Veriff’s advanced identity verification solutions.

Kaarel Kotkas, Veriff’s CEO, emphasizes the importance of education and awareness about AI’s capabilities, encouraging individuals to be wary of suspicious emails or unknown calls, especially when asked for sensitive information or money. He urges people to trust their instincts and ask questions when in doubt. At the same time, Kotkas calls on companies, particularly social media platforms, to take responsibility for verifying accounts to shield users from false content that can affect elections. As he puts it: “Trust, verification and being source-critical will be the key to maintaining the integrity and protecting the principles of democracy this election season.”

We’re constantly developing our real-time remote biometric identification solutions, and we use AI and machine learning to make our identification process faster, safer, and better. We operate globally, and our legal teams continuously monitor the various local legal landscapes, so we are well-positioned to navigate new and changing regulations.

Veriff follows best practice guidance regarding fraud management. We take a multifaceted approach to combating deepfakes and AI-generated media.

One key strategy is implementing FaceCheck Liveness to validate the authenticity of human presence. It uses advanced algorithms and passive liveness detection, which requires no additional action from the user, to mitigate the risk of impersonation fraud through digital manipulation or presentation attacks. In addition, we employ DocCheck to validate the legitimacy of documents, using machine learning algorithms to analyze document features and detect anomalies or signs of manipulation.

Our DeviceCheck helps combat synthetic identities, recurring fraud, multi-accounting, and velocity abuse by analyzing data points from the user’s device and network that could indicate fraud rings or other anomalies. It is also an integral part of our CrossLinks, which can more effectively identify and remove duplicate or ineligible registrations, reducing the potential for fraudulent voting.

CrossLinks enhances fraud detection capabilities in real time to uncover fraud patterns that are not visible when looking at a single verification session in isolation. CrossLinks enables us to identify potentially synthetic identities and uncover fraud rings by highlighting potential links between different sessions. This is done by comparing document, device, network, and behavioral indicators and identifying high-risk sessions that may connect to previous sessions.
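
To make the idea of cross-session linking more concrete, here is a minimal Python sketch. It is not Veriff’s CrossLinks implementation: the `Session` fields (`document_hash`, `device_id`, `ip_address`) and the `link_sessions` helper are hypothetical, and the sketch assumes that an exact match on any shared indicator is enough to link two sessions. A union-find structure then merges directly and transitively linked sessions into clusters that could point to a fraud ring.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Session:
    """A hypothetical verification session with a few linkable indicators."""
    session_id: str
    document_hash: str
    device_id: str
    ip_address: str


class UnionFind:
    """Disjoint-set structure used to merge sessions into linked clusters."""

    def __init__(self) -> None:
        self.parent: dict[str, str] = {}

    def find(self, x: str) -> str:
        self.parent.setdefault(x, x)
        # Path halving keeps lookups fast as clusters grow.
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]
            x = self.parent[x]
        return x

    def union(self, a: str, b: str) -> None:
        self.parent[self.find(a)] = self.find(b)


def link_sessions(sessions: list[Session]) -> list[set[str]]:
    """Group sessions that share any indicator, directly or transitively."""
    uf = UnionFind()
    first_seen: dict[tuple[str, str], str] = {}
    for s in sessions:
        uf.find(s.session_id)  # register every session, even unlinked ones
        for kind, value in (("document", s.document_hash),
                            ("device", s.device_id),
                            ("ip", s.ip_address)):
            key = (kind, value)
            if key in first_seen:
                uf.union(s.session_id, first_seen[key])
            else:
                first_seen[key] = s.session_id
    clusters: dict[str, set[str]] = defaultdict(set)
    for s in sessions:
        clusters[uf.find(s.session_id)].add(s.session_id)
    # Only clusters with more than one session represent a cross-session link.
    return [c for c in clusters.values() if len(c) > 1]


if __name__ == "__main__":
    sessions = [
        Session("s1", "docA", "dev1", "10.0.0.1"),
        Session("s2", "docB", "dev1", "10.0.0.2"),  # shares a device with s1
        Session("s3", "docC", "dev2", "10.0.0.2"),  # shares an IP with s2
        Session("s4", "docD", "dev3", "10.0.0.9"),  # shares nothing
    ]
    print([sorted(cluster) for cluster in link_sessions(sessions)])  # [['s1', 's2', 's3']]
```

Treating every shared value as a link keeps the example short, but it is a simplification: many legitimate users share an IP address behind the same network, so a production system would more likely weight each indicator and combine it with behavioral signals before flagging a cluster.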

As part of our Fraud Protect fraud mitigation package, Veriff also offers Velocity Abuse Prevention, a feature designed to automatically prevent multi-accounting. By detecting and preventing multi-accounting, election authorities can help ensure that each eligible voter casts only one legitimate vote. This helps maintain the integrity of the electoral process and reduces the risk of fraudulent voting.
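
The general idea behind velocity checks can be sketched in a few lines of Python. This is a hypothetical illustration, not Veriff’s Velocity Abuse Prevention: the `VelocityGuard` class, its thresholds, and the `identity_key` (for example, a hash of verified document data) are assumptions. It combines a sliding-window limit on attempts per device with a rule that each verified identity may register only once.

```python
from collections import defaultdict, deque


class VelocityGuard:
    """Hypothetical sketch: flag rapid repeat attempts and duplicate registrations.

    - max_attempts / window_seconds: how many verification attempts a single
      device may make within a sliding time window (illustrative values).
    - registered: identity keys that already hold a registration, so a second
      registration with the same identity is rejected.
    """

    def __init__(self, max_attempts: int = 3, window_seconds: float = 3600.0) -> None:
        self.max_attempts = max_attempts
        self.window_seconds = window_seconds
        self.attempts: dict[str, deque[float]] = defaultdict(deque)
        self.registered: set[str] = set()

    def check(self, device_id: str, identity_key: str, now: float) -> str:
        window = self.attempts[device_id]
        # Drop attempts that have fallen out of the sliding window.
        while window and now - window[0] >= self.window_seconds:
            window.popleft()
        window.append(now)  # every attempt counts, including blocked ones
        if len(window) > self.max_attempts:
            return "blocked: too many attempts from this device"
        if identity_key in self.registered:
            return "blocked: identity already registered"
        self.registered.add(identity_key)
        return "accepted"


if __name__ == "__main__":
    guard = VelocityGuard(max_attempts=2, window_seconds=60)
    print(guard.check("dev1", "voter-123", now=0))   # accepted
    print(guard.check("dev2", "voter-123", now=5))   # blocked: identity already registered
    print(guard.check("dev1", "voter-456", now=10))  # accepted
    print(guard.check("dev1", "voter-789", now=20))  # blocked: too many attempts from this device
```

Blocked attempts are still recorded in the device’s window in this sketch, so a device that keeps retrying stays throttled until its older attempts age out of the window.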

In addition to the identity verification fraud mitigation capabilities of Fraud Protect, which protects organizations against impersonation and synthetic identity fraud, identity document fraud, velocity abuse, and multi-accounting, Fraud Intelligence enables organizations to feed valuable, actionable risk intelligence into their decision-making systems, so they can build advanced and flexible counter-fraud strategies or investigate cases further.

RiskScore is an integral part of Fraud Intelligence. It is a numerical value representing the overall risk associated with an identity verification session, calculated from the various signals generated throughout the end user’s IDV journey and further tuned by Veriff’s data science team using advanced machine-learning models. It can be used as an additional factor in the voter verification process, helping ensure that only legitimate voters are able to participate in the election.
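
As a rough illustration of how several session signals can be folded into one number, the following Python sketch uses a hand-weighted logistic function. It is not Veriff’s RiskScore model: the signal names, weights, bias, and decision thresholds are all invented for this example, whereas Veriff describes its score as tuned by its data science team using machine-learning models.

```python
import math

# Hypothetical signal weights and bias, invented for illustration only.
# A real system would learn such parameters from labelled data.
WEIGHTS = {
    "document_tampering_suspected": 2.5,
    "liveness_failed": 3.0,
    "device_linked_to_other_sessions": 1.5,
    "ip_country_mismatch": 0.8,
}
BIAS = -3.0


def risk_score(signals: dict[str, bool]) -> float:
    """Combine binary session signals into a score between 0 and 1."""
    z = BIAS + sum(w for name, w in WEIGHTS.items() if signals.get(name, False))
    return 1.0 / (1.0 + math.exp(-z))  # logistic (sigmoid) function


def decide(score: float, review_at: float = 0.3, reject_at: float = 0.8) -> str:
    """Map a score to an action; the thresholds are illustrative assumptions."""
    if score >= reject_at:
        return "reject"
    if score >= review_at:
        return "manual review"
    return "approve"


if __name__ == "__main__":
    for signals in ({}, {"liveness_failed": True},
                    {"liveness_failed": True, "document_tampering_suspected": True}):
        score = risk_score(signals)
        print(f"{score:.2f} -> {decide(score)}")
```

Keeping a middle “manual review” band, rather than a single pass/fail cut-off, reflects the idea of using the score as one additional factor in a decision rather than as the sole gatekeeper.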

Overall, the combination of AI-powered deepfakes and the potential for widespread disinformation poses a significant challenge to the integrity of US elections. Veriff can be an important part of the solution, helping to maintain trust in the electoral process and protect the fundamental principles of democracy.

FAQ

1. What is AI’s role in political campaigns?

AI helps campaigns analyze voter data, automate outreach, optimize digital ads, and predict voter behavior. It provides insights to target the right audiences with tailored messages.

2. How does AI help in voter targeting?

AI can analyze vast amounts of voter data, such as demographics, behavior, and preferences, to identify specific voter groups. Campaigns can then focus on the most relevant messages for each group, increasing engagement and turnout.

3. Can AI be used to create political ads?

Yes, AI can generate personalized political ads by analyzing data to create content that resonates with different voter segments. It can also optimize ad delivery by determining the best platforms and times to reach target audiences.

4. How does AI affect campaign fundraising?

AI helps streamline fundraising by analyzing donor data to identify patterns and predict which supporters are likely to donate. Campaigns can then send targeted messages and appeals to those most likely to contribute.

5. Is AI used in managing social media for political campaigns?

AI tools can automate social media monitoring, content posting, and engagement. They help campaigns stay responsive, track voter sentiment, and manage their online reputation more efficiently.

6. What are the legal limitations of using AI in political campaigns?

Regulations on AI use in campaigns vary by country and often focus on data protection, transparency in digital advertising, and the ethical use of AI-generated content.
